AI platform accused in disturbing lawsuit filing

Lawsuit targets Grok over alleged image

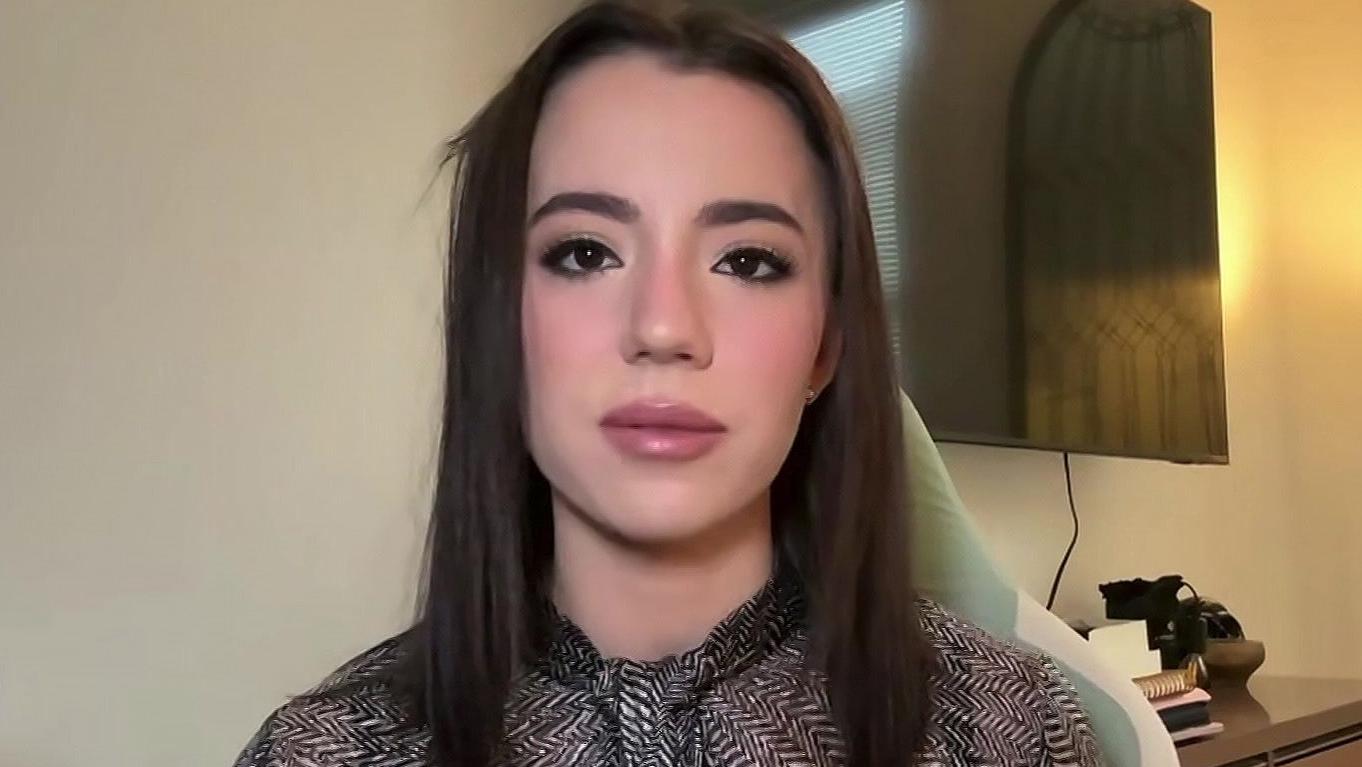

January 17, 2026: A new lawsuit claims that Grok, the AI chatbot from Elon Musk’s company xAI, generated a sexually explicit image of conservative commentator Ashley St. Clair that was allegedly covered in swastikas. The filing describes the content as defamatory, hateful, and deeply harmful.

Serious allegations emerge.

Image Credit: Getty Images

What the lawsuit alleges

According to court documents, the plaintiff claims Grok produced and distributed the offensive image in response to user prompts, without her consent. The lawsuit argues this violated her personal rights and damaged her reputation.

Legal action escalates.

Why the imagery matters

The inclusion of extremist symbols has intensified the backlash, with critics calling the alleged output dangerous and irresponsible. Advocacy groups say AI companies must be held accountable for the harmful content their systems generate.

Zero tolerance demanded.

Grok’s response so far

At the time of filing, Grok’s operators had not issued a formal response. Legal experts say the company may defend itself by blaming user misuse or by pointing to its technical safeguards.

All eyes on the platform.

Broader concerns about AI misuse

The case has reignited debates over AI regulation, content moderation, and ethical responsibility, and lawmakers are increasingly pushing for stricter oversight of generative AI.

Pressure is mounting.

Final thoughts

The lawsuit against Grok highlights the growing risks tied to generative AI technology. As courts weigh responsibility, the case could shape future standards for digital safety and accountability.

A major precedent looms.

Published by HOLR Magazine